Bit of a longer post this one as this took me ages to get working and involved me having to try to learn lots of new stuff to the level where i could make it work.....(without really truly understanding a lot of it - so if there are mistakes don't be surprised)

So what was i trying to do? Well, we still receive some files from external 3rd parties via ftp. These should of course all have been replaced many years ago - ftp isn't very good and not very secure, we all know this - but apathy and laziness (and dependencies on 3rd parties actually doing something) has meant that we still have these (and realistically i'm sure a lot of companies who are not start ups have the same situation).

I really hoped this problem had already been solved by Microsoft and there would be a simple PaaS ftp service on offer in Azure - this isn't there and i think Microsoft have either missed a trick or they don't want to be promoting archaic ways of doing things - personally i think they are missing out on a revenue stream here - this would be pretty easy to do and would likely be quite popular.

So in the absence of that what can we do - i really don't want to have to run a server in Azure just to host an ftp server on a public ip and i don't want to hook that up anyway to the rest of my network as it's exposing quite a security risk.

Well one solution (as this is Gyorgy's idea not mine - he's the brains behind this) is to host a docker container running an ftp server in one of the container service offerings from Microsoft. If we can back this with Azure file storage we can have a persistent easily accessible file store for ftp that does not have to be hooked up to the other networking components we have in Azure - it gives us nice isolation and minimizes the amount of management we have to do.

We initially looked at the older container instances offering - this would have been suitable but has a major drawback - it's not possible to have a fixed public ip address - for some 3rd parties this would be a problem as they would have to open up outgoing firewalls to this - if it's not static then it won't work

With that in mind this really left one obvious solution AKS (or kubernetes services as it seems to now display as in the portal).

I'm not going to try and explain containers/docker/kubernetes as i don't understand it well enough to be able to make it look like i know what I'm talking about - the following steps will just explain what i did to get the setup working - the deeper understanding i still have to get (and I'm assuming many of you do too - it seems however that this kind of architecture really is going to dominate things for the next few years)

OK - so let's get to it.

First thing we have to do is order AKS (now this screen changed half way through me setting this up and added loads of extra options - i'm ignoring most of them for this as it's not required)

First we select some basics - they are all pretty self explanatory - i just made sure to change the machine size to the smallest available as this can't be done afterwards and i want to keep costs to a minimum (the estimated cost is about 8€/month for a B1)

Now i could have just clicked review and create at this stage as i'll just take defaults for everything else - but just to show a couple of the new options here are some extra screenshots - the main one below is the one that seems to allow direct VNET integration to existing networks - we're very interested in this one for other applications.

It also allows direct OMS integration - done for you if you want it

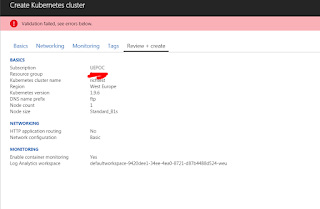

Now at this point i clicked review and create and it failed as shown below

After some checking i found that choosing an existing resource group seemed to work but creating a new one didn't.... don't know why this would be - perhaps a glitch, as it's still a preview feature.

At this point everything looked good and i clicked create - after a few minutes i got this....

Now after digging into this it is caused by a naming policy we have set for resource groups - it seems that the deployment doesn't put everything into the resource group i chose (thanks for that) - it actually creates a 2nd one called MC_{resource group name i picked} and wants to put stuff in there - this violates my naming so the policy stops the deployment from completing fully.

The problem is the naming policy is quite strict (mainly because there is very little flexibility in how this can currently be defined - another preview feature :-)). The only way round this is to temporarily disable the policy - as we can't exclude this specific RG as it doesn't exist yet and the process wants to create it......

So after disabling the policy i tried again

And it failed again (after about 90 minutes.....) - after trying various different ways of ordering this i finally got it to work by letting the server size default and not activating the OMS monitoring feature - not sure which one fixed it but anyway - here's what you see when it does finally work

Which looks like this in the normal kubernetes screen - so finally progress (and this should have been the easy bit....)

Now we have this AKS 'thing' we have to now deploy an ftp container to it that is somehow linked to azure file storage.......

As I'm going to need it later on i'll now create a new storage account and a file share within that - this will be used as the back end file store for the actual ftp files uploaded. The simple steps to do that are:

1. Create the account

2. Add a file share

3. Navigate and find the storage access keys which we'll need in a bit
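Since everything else in this post is done from cloud shell, those three steps could also be done there rather than in the portal. This is only a minimal sketch: the account name ftpuk5 and share name ftp are the ones used later in this post, but the function name, the resource group, the region and the Standard_LRS sku are assumptions for illustration.

```shell
# Sketch only: creates a storage account and a file share, then prints key1.
# Usage: create_ftp_storage <resource-group> <account> <share> <region>
create_ftp_storage() {
  rg="$1"; account="$2"; share="$3"; region="$4"

  # 1. Create the account (Standard_LRS is an assumed choice of sku)
  az storage account create --resource-group "$rg" --name "$account" \
    --location "$region" --sku Standard_LRS >/dev/null

  # 3. Fetch the first access key (key1), needed for the secret later
  key=$(az storage account keys list --resource-group "$rg" \
    --account-name "$account" --query "[0].value" --output tsv)

  # 2. Add the file share, authenticating with that key
  az storage share create --name "$share" --account-name "$account" \
    --account-key "$key" >/dev/null

  printf '%s\n' "$key"
}

# Example with placeholder names:
#   create_ftp_storage my-rg ftpuk5 ftp westeurope
```

The key is fetched before the share is created only because the share command needs some form of credential; the end result is the same as the three portal steps.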

I'm going to do all of this from bash in cloud shell (no local software required) - to do this i can get a full screen version of cloud shell - i just navigate to https://shell.azure.com

Once there i need to configure my session to be talking to the AKS i just created - to do that i run

az aks get-credentials --resource-group rg-name-i-chose-here --name aks-name-i-chose-here

And to confirm i'm talking to it ok - here is some basic command output

So now I'm ready to do all the kubernetes steps - i'll describe them now in the order it should be done to get this working (this wasn't the order i originally had most things in and trial and error got me here....).

The first thing we need is a kubernetes secret to hold the storage account key and name for later use (i think this is possible to store in a key vault rather than directly in a kubernetes secret but I didn't figure out how to do that so far).

The secrets have to be encoded in base64 - the easiest way to find the encoded version is to use an online tool - i used the one below (there are loads of others).

https://www.base64decode.org/

Navigate there, enter the value you want to encode and grab the output - do this for both the storage account name (in my case ftpuk5) and the key (i used key1 but key2 could also be used).
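Pasting a storage account key into a website is not ideal, and the same encoding can be done directly in cloud shell. A small sketch, where the helper name b64 is made up for this example:

```shell
# Encode a value as base64 on a single line.
# printf '%s' is used instead of echo so that no trailing newline
# ends up inside the encoded value; tr removes base64's line wrapping.
b64() {
  printf '%s' "$1" | base64 | tr -d '\n'
  echo
}

b64 ftpuk5   # prints ZnRwdWs1
```

The same call with the storage key as the argument gives the second value needed for the secret.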

Now we have these encoded values we need to create a secret containing them - this is done using the kubectl command passing in a yaml formatted file with the secret in.

(side note here - whoever created yaml is a sadist.....)

here is my yaml file to do that (note formatting is crucial in all of these files)

    apiVersion: v1
    kind: Secret
    metadata:
      name: azurefile-secret
    type: Opaque
    data:
      azurestorageaccountname: ZnRwdWs1
      azurestorageaccountkey: base64stringhere

To now actually create this i run

kubectl create -f ftp5secret.yaml
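As an alternative to hand-encoding the values and writing the yaml, kubectl can build an equivalent secret itself and does the base64 encoding for you. A sketch of that route, assuming the same secret name and key names as the yaml above; the function name and the STORAGE_KEY variable are only placeholders:

```shell
# Sketch: let kubectl create the secret and handle the base64 encoding.
# Usage: create_storage_secret <storage-account-name> <storage-account-key>
create_storage_secret() {
  kubectl create secret generic azurefile-secret \
    --from-literal=azurestorageaccountname="$1" \
    --from-literal=azurestorageaccountkey="$2"
}

# Example with placeholder values:
#   create_storage_secret ftpuk5 "$STORAGE_KEY"
```

Use one route or the other, not both, as the second attempt would fail because the secret already exists.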

The next stage is to add a storage class - i only had to do this because the 'easy' way of mounting azure files won't let you change permissions, and this workaround seems to be the only way of doing it - unfortunately it makes the process a lot more complicated.

So again i create a yaml file for this - in it i refer to the storage account created earlier

    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: rich-storage
      annotations:
      labels:
        kubernetes.io/cluster-service: 'true'
    provisioner: kubernetes.io/azure-file
    parameters:
      storageAccount: 'ftpuk5'
    reclaimPolicy: 'Retain'

kubectl create -f ftp5storage.yaml

Now i have to create a persistent volume based on the storage class i just created and pointing at the azure file storage i created earlier - note the key part here is that i can change permissions on it - which makes the process work later on.

    apiVersion: "v1"
    kind: "PersistentVolume"
    metadata:
      name: pvc-azurefile
    spec:
      capacity:
        storage: "5Gi"
      accessModes:
        - "ReadWriteMany"
      storageClassName: rich-storage
      azureFile:
        secretName: azurefile-secret
        shareName: ftp
        readOnly: false
      mountOptions:
        - dir_mode=0777
        - file_mode=0777
        - uid=1000
        - gid=1000

Now (and bear with me - i know it's a lot of yaml so far) we have to create a 'persistent volume claim' - this is a request for storage that gets bound to the volume created in the earlier step, and it's the claim (not the volume itself) that the app refers to later

    apiVersion: "v1"
    kind: "PersistentVolumeClaim"
    metadata:
      name: "pvc-ftp"
    spec:
      storageClassName: rich-storage
      accessModes:
        - "ReadWriteMany"
      resources:
        requests:
          storage: "5Gi"
      volumeName: "pvc-azurefile"

Right nearly there - now we actually create a yaml file that uses a container from docker which has a prebuilt pure-ftpd daemon which seems to be in a very locked down and secured state by default - i'm using this one as it seemed to be the best fit - but you could use others or build your own

https://hub.docker.com/r/stilliard/pure-ftpd/

The yaml for the actual application then looks like this

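    # Note: apps/v1beta1 is what worked when this was written. It was removed
    # in Kubernetes 1.16; newer clusters need apps/v1 plus a spec.selector
    # with matchLabels matching the pod labels (app: ftpuk).
    apiVersion: apps/v1beta1
    kind: Deployment
    metadata:
      name: ftp5-deployment
    spec:
      template:
        metadata:
          labels:
            app: ftpuk
        spec:
          hostNetwork: true
          containers:
            - name: ftpuk
              image: stilliard/pure-ftpd:hardened
              ports:
                - containerPort: 21
              volumeMounts:
                - name: azurefileshare
                  mountPath: /home/ftpusers/
          volumes:
            - name: azurefileshare
              persistentVolumeClaim:
                claimName: pvc-ftp

If i run that in, the app is created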

kubectl create -f ftp5ftp.yaml

At this point i can connect into the 'pod' running this and can see that the azure file storage is mounted ok - to check this we can do the following:

kubectl get pods

This returns the pod name that we can then connect to

    NAME                               READY   STATUS    RESTARTS   AGE
    ftp5-deployment-6c6d6586d9-bscdf   1/1     Running   0          46m

to connect to this we use the following syntax

kubectl exec -it ftp5-deployment-6c6d6586d9-bscdf -- /bin/bash

If i then do a couple of basic commands inside this bash shell - i can see the storage and the permissions on it

So we can see it's owned by ftpuser:ftpgroup - this is vital for the steps later on.
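The exact commands from the screenshot aren't reproduced here, but a check along these lines shows the same information without opening an interactive shell. This is a sketch: the function name is made up, and it assumes df and ls are available in the image (they are standard tools, but i haven't verified this particular image).

```shell
# Sketch: show the mounted share and the ownership of the ftp home directory.
# Usage: check_ftp_mount <pod-name>
check_ftp_mount() {
  # Which filesystem is mounted at the ftp home directory
  kubectl exec "$1" -- df -h /home/ftpusers
  # Owner, group and permissions of that directory
  kubectl exec "$1" -- ls -ld /home/ftpusers
}

# Example: check_ftp_mount ftp5-deployment-6c6d6586d9-bscdf
```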

At this moment the app is not exposed outside of the isolated AKS environment - ie. it's just running in the 10.x subnet - so there is no way for the outside world to communicate with it.

So let's change that bit - here i want to always have a fixed public ip - so to do this let's create one and then reference that in the kubernetes environment.

An important note at this point is that this public ip has to be in the 'infrastructure resource group' that AKS created - and not the one you created in the very first step. This is the dynamic one created by AKS itself during the provisioning and is where the actual host VMs are located. If the public ip is in a different RG it will still create fine, but AKS can't see it and it will be unusable later.

To find the infra resource group name you can navigate to here

It always seems to be prefixed MC_ and be made up of the RG name you chose + the aks name + the region, separated by underscores.
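Rather than working the name out by hand, the cluster can be asked for it from cloud shell, since AKS reports it as the nodeResourceGroup property. A minimal sketch, with a made-up function name and placeholder arguments:

```shell
# Sketch: print the infrastructure resource group that AKS created.
# Usage: aks_infra_rg <resource-group> <aks-name>
aks_infra_rg() {
  az aks show --resource-group "$1" --name "$2" \
    --query nodeResourceGroup --output tsv
}

# Example with the same placeholders as the get-credentials command:
#   aks_infra_rg rg-name-i-chose-here aks-name-i-chose-here
```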

To mix things up a bit let's just create the public ip via the az command line rather than the gui portal

that's run like this

az network public-ip create --resource-group MC_rgnamefromscreenshot --name ftpuk5pip --allocation-method static

This returns a whole load of json about what it created - part of which is the ip - if i just want the ip and none of the other stuff i can run

az network public-ip list --resource-group MC_rgnamefromscreenshot --query "[0].ipAddress" --output tsv

which just returns x.x.x.x

OK now we have that fixed ip we need to create an external load balancer item in kubernetes that is linked to my ftp deployment - so let's create some more yaml for that

    apiVersion: v1
    kind: Service
    metadata:
      name: ftp-front
    spec:
      loadBalancerIP: x.x.x.x
      type: LoadBalancer
      ports:
        - port: 21
      selector:
        app: ftpuk

Now let's load this with kubectl create -f, the same way as the earlier yaml files
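A sketch of that load step, plus a check that the load balancer has picked up the ip. The yaml file name isn't given in this post so it's left as an argument, and the function name is made up; ftp-front is the service name from the yaml above.

```shell
# Sketch: create the service and show its current state.
# Usage: expose_ftp <service-yaml-file>
expose_ftp() {
  kubectl create -f "$1"
  # EXTERNAL-IP shows <pending> until Azure has attached the public ip,
  # so this may need re-running after a minute or two
  kubectl get service ftp-front
}
```

Once the EXTERNAL-IP column shows the fixed ip rather than pending, the service is reachable from outside.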

Now at this point we have an external facing ftp site that we can connect to

However what's missing is a username/password to get into that - so far there isn't one - so lets add one

To do this we must be 'inside' the context of the container

kubectl exec -it ftp5-deployment-6c6d6586d9-bscdf -- /bin/bash

Once inside we then run this - which creates a new user called ukftp

root@aks-agentpool-40234749-0:/# pure-pw useradd ukftp -f /etc/pure-ftpd/passwd/pureftpd.passwd -m -u ftpuser -d /home/ftpusers/ukftp

Password:

Enter it again:

Now if we go back to our dos ftp window - we can login

And then upload a file (remember to set the binary option!)

Now if we go and look at the azure storage we can see the file i just uploaded

Now the file is here we can make use of it in other apps without needing to directly connect the systems - we can fetch from azure storage however we like.

And there we have it - a fully functional PaaS* ftp service in Azure. All of the files held securely in azure storage with all the backup/replication goodness that comes with that.

* - well it's pretty much PaaS isn't it? I've just glued together various bits of things without really ever creating my own app - i just configured other things - this is infrastructure as code - well it could be made to be.

Few other things to mention that may be relevant for you.

1) I tried to have 2 azure file shares - one for the files and another for the ftp user passwords - it didn't seem to be able to cope with more than one though

2) because of 1 - this means every container redeployment (or indeed additional pods) would need the ftp users adding

3) 1 should be fixable by making reference to the config file in another location - however i couldn't see an easy way to do that without changing the base docker image (even if that's just to change the run command line)

4) and this is the thing that had me stumped for ages - in the app deployment yaml you have to set this

hostNetwork: true

without that you just get these kind of errors

500 I won't open a connection to x.x.x.x (only to x.x.x.x)

If the passwords being centralised is fixed (as mentioned in point 1 above) then this can easily be made into a fault tolerant setup - you'd just deploy multiple pods and they should quite happily work all talking to the same central file share.
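Until the passwords are centralised, the re-adding of users from point 2 can at least be scripted. This is only a sketch under assumptions: the function name is made up, it relies on the app=ftpuk label from the deployment yaml to find the pods, and it reuses the same pure-pw command as earlier, so it still prompts for the password once per pod.

```shell
# Sketch: add the same ftp user to every pod of the ftp deployment.
# Usage: add_ftp_user <username>
add_ftp_user() {
  user="$1"
  # Names of all pods carrying the app=ftpuk label
  pods=$(kubectl get pods -l app=ftpuk \
    -o jsonpath='{.items[*].metadata.name}')
  for pod in $pods; do
    # Same pure-pw call as before, run inside each pod in turn
    kubectl exec -it "$pod" -- pure-pw useradd "$user" \
      -f /etc/pure-ftpd/passwd/pureftpd.passwd -m -u ftpuser \
      -d "/home/ftpusers/$user"
  done
}

# Example: add_ftp_user ukftp
```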

Thanks to Andrew for the docker image (https://github.com/stilliard)

Hope this is useful........
