We've been looking into getting better control over our spending in Azure - as many of you may have found it's very easy to start spending a lot more than you intended - and this is true of all cloud providers - resources are so easy to add they get forgotten about or oversized or aren't shut down when not in use and the costs can really rack up.

Microsoft do offer some ways to help you manage this - Azure advisor recommends things to save costs, there is cost detail now in the 'normal' portal directly (so it's not just in the ea portal any more) and relatively recently the cloudyn toolset has been integrated into the portal. We have also plugged power BI into the ea rest api's which gives us some nice visualisation.

All of these things help and maybe between them all they can cover all the functionality we need but I was interested to see what OMS log analytics could offer as this seems to be a perfect kind of use case for what it can be used for.

So based on my previous triumph of finding a way to log data directly to log analytics (for those of you that missed that - which I'm sure is most of you - that detail is here )

Now all I had to do was find a way to get the billing data from the ea portal into a usable format that could be loaded into my OMS log creator - that should be easy right?

Well in the end the code isn't to long but it took me ages to figure this out.

I'm no powershell coder (as may become apparent from the code) so that made things take longer than they should and our on premise networking setup did its best to scupper me but in the end I got the code to work - I'll paste it here in nice format then I'll pick out some of the relevant parts to explain:

Function New-Signature ($customerId, $sharedKey, $date, $contentLength, $method, $contentType, $resource) { $xHeaders = 'x-ms-date:' + $date $stringToHash = $method + "`n" + $contentLength + "`n" + $contentType + "`n" + $xHeaders + "`n" + $resource $bytesToHash = [Text.Encoding]::UTF8.GetBytes($stringToHash) $keyBytes = [Convert]::FromBase64String($sharedKey) $sha256 = New-Object -TypeName System.Security.Cryptography.HMACSHA256 $sha256.Key = $keyBytes $calculatedHash = $sha256.ComputeHash($bytesToHash) $encodedHash = [Convert]::ToBase64String($calculatedHash) $authorization = 'SharedKey {0}:{1}' -f $customerId, $encodedHash return $authorization } Function Send-OMSData($customerId, $sharedKey, $body, $logType) { $method = 'POST' $contentType = 'application/json' $resource = '/api/logs' $rfc1123date = [DateTime]::UtcNow.ToString('r') $contentLength = $body.Length $signature = New-Signature ` -customerId $customerId ` -sharedKey $sharedKey ` -date $rfc1123date ` -contentLength $contentLength ` -method $method ` -contentType $contentType ` -resource $resource $uri = 'https://' + $customerId + '.ods.opinsights.azure.com' + $resource + '?api-version=2016-04-01' $headers = @{ 'Authorization' = $signature 'Log-Type' = $logType 'x-ms-date' = $rfc1123date 'time-generated-field' = "" } Write-Host $uri Write-Host $signature $response = Invoke-WebRequest -Uri $uri -Method $method -ContentType $contentType -Headers $headers -Body $body -UseBasicParsing return $response.StatusCode } #Get the Azure Billing Enrollment Number from ea.azure.com under Manage->Enrollment Number $enrollmentNo ="enrollment-number-from-ea-portal-here" #Get the Accesskey from ea.azure.com under Reports->Download Usage->API Access Key. Create a new Access key if needed. $accesskey = "really-long-access-key-from-ea-portal-here" $contentType = "application/json;charset=utf-8" $authHeaders = @{"authorization"="bearer $accesskey";"api-version"="1.0"} Try{ $uri = "https://consumption.azure.com/v2/enrollments/$enrollmentNo/usagedetails" Do { $rich = Invoke-RestMethod -Uri $uri -Headers $authHeaders -ContentType $contentType ForEach ($line in $rich.data ) { $outputter = ($line|ConvertTo-Json) Send-OMSData -customerId 'secret-key-for-oms-here' -sharedKey 'secret-pass-key-here' -body $outputter -logType 'BILL' } $uri = $rich.nextLink } until (!$uri) } Catch [System.Exception] { $loopLine = $_.InvocationInfo.ScriptLineNumber; $loopEx = $_.Exception; $errMessage = "Exception at line $loopLine message: $loopEx" Write-Host $errMessage -ForegroundColor Red } #end Catch

If you insert the relevant values for enrolment number, the secret key for ea along with the oms id and secret key and then run that block of code you'll get a row inserted for every day in the current month for every single resource in azure - this includes loads of properties including cost etc

So lets look at the code a little more to explain whats going on.

The first part of the block is 2 functions which I covered in that previous blog post so I wont go over that again - they just enable a 'row' to be written to OMS.

The second part is now the interesting part.

1) The first section just sets up some variables for use later on - including enrolment id and access key - this is essentially the username/password to be able to query the rest API of the EA portal

2) The try block then sets an initial uri to access which is then invoked with the invoke-restmethod function/cmdlet (whatever it is) - we pass into that the uri, the headers containing the authentication and the content type we want (in this case JSON)

3) This returns a chunk of data embedded in which is a 1000 line array of json data containing the 1000 'rows' we want to insert

4) we then loop through this initial batch converting each 'line' back into json format (the cmdlet has transparently converted the original json into a powershell object which we now want to change back) - we then put the data in the format we want into the $outputter variable

5) Now we call my send to OMS function passing in that nicely formatted json which is exactly in the format it wants to create the data in OMS - I nthis case I create a new log type called 'BILL' to make it easier to find.

6) The final part is to wrap the whole call in a loop so we can fetch the next array of 1000 and so on until there is no more data - this is achieved as one of the properties of the original array is a link to the next array ($rich.nextlink) - we then set $uri the that value go back to the start of the loop - invoke the rest api with this new url and then just repeat until nextlink is a null string - then we know we are complete.

After all of that lot we then have lots of nice data created - in my case it generated about 22000 rows for just 4.5 days of usage in March - so the volume can really ramp up

So that's all good you say - but show us something in log analytics

OK lets do that - I wrote some (very) basic queries just to illustrate the data is there but I still have to enhance this to make it pretty (and useful) and actually also maybe to add some billing alerts too.

So as a kick off as a basic one:

search * |where Type == "BILL_CL" |where date_t == '03/02/2018'|top 20 by cost_d |project product_s,cost_d

that looks like this ( I removed some of the 'useful' fields like subscription and resource groupas I don't want to advertise those

That shows the top 20 most expensive items from the 2nd of March

This next one shows the total spend per day in March

search * |where Type == "BILL_CL" |where TimeGenerated > ago (180m) |summarize sum(cost_d) by bin(date_t,1d)

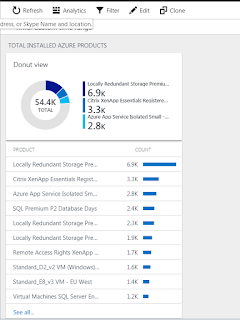

I then muddled my way through and created a donut and details view which looks like this - largely based on this query

search * |where Type == "BILL_CL" | summarize AggregatedValue = sum(cost_d) by product_s| order by AggregatedValue desc

Anyway - you get the idea - you can do pretty useful stuff with this once you have the data

Next step for me is I think to change this to pull down just 1 day at a time every day so I don't have duplicate data and have to work out how to filter that out.

I also then need to schedule my powershell script as some sort of automation along with taking out the hardcoded secret keys and doing something more secure with them

Once that's done I'll attempt some sort of alerting based on captured data

Follow up blogs to come (probably.....) or I might decide I can develop this as my own billing solution for Azure and retire off the profits.....

For reference the definition of what the json 'row' contains that is stored in log analytics - it's defined at the Microsoft link here:

https://docs.microsoft.com/en-us/rest/api/billing/enterprise/billing-enterprise-api-usage-detail#response

Good stuff, I'll be taking a look at this.

ReplyDeleteI've been manually dragging data down from the API and performing excel wizardry one it. not something I want to keep doing forever so I really need to apply the gray matter and come up with something better (tried cloudyn but it sucks)

ReplyDeleteThis is exactly what I needed. Thanks so much for posting!

ReplyDelete