Whilst messing around with datapump these past few weeks I came across a view that is used internally to decide what method can be used to unload the table - either 'direct path' (fast) or 'external table' (not so fast). I was interested to find out what criteria mean that a table has to revert to external table unload method. This switch can make a big difference to extract speed so i started doing a quick investigation into it.

The view in question is SYS.KU$_UNLOAD_METHOD_VIEW, this is a 3 column view which just displays an object id and 2 flag columns. One of these tells us the unload method and the other tells us if external table parallel unload can be used.

SYS@DB>desc sys.KU$_UNLOAD_METHOD_VIEW

Name Null? Type

----------------------------------------- -------- ----------------------------

OBJ_NUM NOT NULL NUMBER

UNLOAD_METHOD NUMBER

ET_PARALLEL NUMBER

The flag columns seem to have 2 distinct values each - by a process of elimination here is what they mean

UNLOAD_METHOD : 1=DIRECT_PATH , 2=EXTERNAL_TABLE

ET_PARALLEL (external table parallel) : 1=CAN , 2=CANT

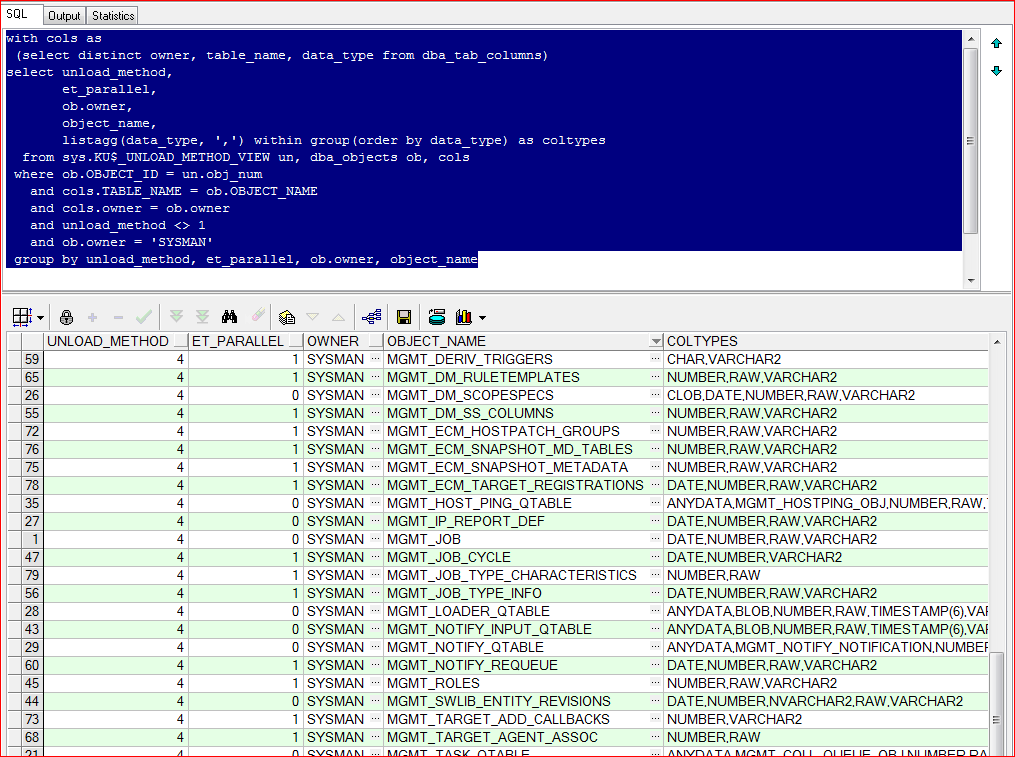

I decided that (from past experience) certain column types had restrictions and these must surely be the reason for the different types of unload being allowed. I then created a quick query to check out that theory

with cols as

(select distinct owner, table_name, data_type from dba_tab_columns)

select unload_method,

et_parallel,

ob.owner,

object_name,

listagg(data_type, ',') within group(order by data_type) as coltypes

from sys.KU$_UNLOAD_METHOD_VIEW un, dba_objects ob, cols

where ob.OBJECT_ID = un.obj_num

and cols.TABLE_NAME = ob.OBJECT_NAME

and cols.owner = ob.owner

and unload_method <> 1

and ob.owner = 'SYSMAN'

group by unload_method, et_parallel, ob.owner, object_name

This just gives a summary of the different types of column in each table and the type of extract that is possible (don't you just love that listagg function).

This gives results as shown below for a random selection of the schema i was looking into (SYSMAN from a 12.1.0.3 cloud control install)

Now some of those column types you think - yep OK i can maybe accept that - the likes of LOBS, weird user defined types etc. However some of the columns look pretty normal and there seems to be no reasonable explanation....

One table in particular just had number columns - EM_ASREPLAY_REPLAY_STATS

I then started to dig a little deeper - what was unusual about this table? After much digging around i was none the wiser - it looked perfectly normal.

Then i realised - why don't i just look at the view definition of the 'unload' view - maybe that reveals all?

And indeed it does!

Here is the view text in full - and it actually has really nice comments to explain what is going on

select t.obj#,

decode (

-- Condition 1: Table has FGAC for SELECT enabled?

(select count(*) from rls$ r where r.obj#=t.obj#

and r.enable_flag=1 and bitand(r.stmt_type,1)=1)

+ -- Condition 2 and 3: Encrypted cols or queue table?

bitand(t.trigflag, 65536+8388608)

+ -- Condition 4a: BFILE columns?

bitand(t.property, 32768)

+ -- Condition 4b: Opaque columns?

(select count(*) FROM opqtype$ o where o.obj# = t.obj#)

+ -- Condition 5: Cols of evolved types that need upgrading?

(select count(*) FROM coltype$ c where c.obj#=t.obj# and

bitand(c.flags,128)>0)

+ -- Condition 6: Any LONG or LONG RAW columns that are not last?

(select count(*) from col$ c where c.obj#=t.obj# and c.type# IN (8,24)

and c.segcol# !=

(select MAX(c2.segcol#) from col$ c2 where c2.obj#=t.obj#) )

+ -- Condition 7: Columns with embedded opaques?

(select count(*) from coltype$ c, type$ ty where c.obj#=t.obj# and

c.toid=ty.toid and bitand(ty.properties, 4096) > 0)

+ -- Condition 8: table with column added that has NOT NULL and

-- DEFAULT VALUE specified

(select count(*) from ecol$ e where e.tabobj# = t.obj#)

+ -- Condition 9: target is 10g instance and table contains subtype,

-- sql_plan_allstat_row_type. This subtype does not exist in 10.2.

(select count(*) from subcoltype$ sc where sc.obj# = t.obj# and

sc.toid = '00000000000000000000000000020215' and

dbms_metadata.get_version < '11.00.00.00.00')

, 0, 1, 4),

--

-- NOTE: The values 1 and 4 from the decode above correspond to the constants

-- prefer_direct and require_external from the package kupd$data_int defined in

-- datapump/dml/prvthpdi. If these values ever change in the package, they must

-- be changed here as well. Can't use pkg's constants because catmeta executes

-- before pkg header is installed.

--

--

-- Ext. Tbls. cannot unload in parallel if:

-- 1. FGAC (row level security) is enabled (Why? see comment below...)

-- 2. It's a nested table

-- 3. Table contains a LOB (possible with columns of type adt, refs,

-- varray or lob).

--

-- The data layer must execute as invoker's rights for unload on FGAC-enabled

-- tables so the security of the caller is enforced (security hole if SYS

-- as definer unloaded the table). But, kxfp processes started in response to

-- a parallel ET unload would also run as the unprived invoker and they then

-- fail calling our internal definer's pkg's like queueing and file mgt.

-- Forcing parallel=1 in this case stays in the context of the worker process

-- which *can* see the internal pkgs because they share the same owner (SYS).

--

decode (

(select count(*) from rls$ r where r.obj#=t.obj# and r.enable_flag=1)

+

bitand(t.property, 2+8+16+8192+262144)

, 0, 1, 0) -- 1: Can do ET parallel unload 0: Can't

from tab$ t

So it seems there are a number of odd restrictions that are not immediately obvious (and to be honest wouldn't be obvious after a lifetime of investigation into this...) I won't list them all again - the view text is pretty self explanatory - i'll just pick out the one that was confusing me as this is perhaps one of the most obscure ones there.....

In my case i hit 'Condition 8' - the table seems to have had not null columns added with a default value - there was an optimization introduced for this exact case to make the column addition 'quick' - however it seems to have the downside that it then breaks direct_path unload (and it does something else weird too that we hot on our datawarehouse - though frustratingly i can't remember the exact circumstances)

Other interesting ones are - tables with a long column where it's not the 'last' column and anything with fine grained access control.

So some intersting gotchas along the way - if you've very big tables with some of these criteria then maybe it's something to look at more closely

The other interesting part is the parallel part at the end, anything with FGAC (again), lobs or nested tables can't be done.

The lobs one is the killer for us - with big tables of lobs performance is much slower than it could be. We get speed up by partitioning these tables - as we can unload multiple partitions at the same time even if the individual partition is external table and noparallel.

Oh - and one final thing. If you try and force datapump to unload with DIRECT_PATH when it can't do it - it will just throw an error - see below

expdp / tables=sysman.mgmt_job access_method=DIRECT_PATH

Export: Release 11.2.0.3.0 - Production on Wed Jul 16 18:28:28 2014

Copyright (c) 1982, 2011, Oracle and/or its affiliates. All rights reserved.

Connected to: Oracle Database 11g Enterprise Edition Release 11.2.0.3.0 - 64bit Production

With the Partitioning option

Starting "ORACLE"."SYS_EXPORT_TABLE_01": /******** tables=sysman.mgmt_job access_method=DIRECT_PATH

Estimate in progress using BLOCKS method...

Processing object type TABLE_EXPORT/TABLE/TABLE_DATA

ORA-31696: unable to export/import TABLE_DATA:"SYSMAN"."MGMT_JOB" using client specified DIRECT_PATH method

Total estimation using BLOCKS method: 128 KB

Processing object type TABLE_EXPORT/TABLE/TABLE

Processing object type TABLE_EXPORT/TABLE/RLS_POLICY/RLS_POLICY

Processing object type TABLE_EXPORT/TABLE/INDEX/INDEX

Processing object type TABLE_EXPORT/TABLE/CONSTRAINT/CONSTRAINT

Processing object type TABLE_EXPORT/TABLE/INDEX/STATISTICS/INDEX_STATISTICS

Processing object type TABLE_EXPORT/TABLE/TRIGGER

Processing object type TABLE_EXPORT/TABLE/STATISTICS/TABLE_STATISTICS

Processing object type TABLE_EXPORT/TABLE/STATISTICS/USER_PREF_STATISTICS

Processing object type TABLE_EXPORT/TABLE/POST_INSTANCE/PROCACT_INSTANCE

Processing object type TABLE_EXPORT/TABLE/POST_INSTANCE/PROCDEPOBJ

Master table "ORACLE"."SYS_EXPORT_TABLE_01" successfully loaded/unloaded

******************************************************************************

Dump file set for ORACLE.SYS_EXPORT_TABLE_01 is:

/oracle/11.2.0.3.1.DB/rdbms/log/expdat.dmp

Job "ORACLE"."SYS_EXPORT_TABLE_01" completed with 1 error(s) at 18:28:41

Hope this is useful.......

Great !

ReplyDeleteThnks