To further expand our cloud control as a CMDB 'concept' i wanted to be able to gather a list of all the schemas we have in all of our databases. This then allows us to better manage everything. In a lot of cases we have multiple schemas in the same database (who needs multitenant huh...? :-))

Anyway i took a look at the metrics already gathered by cloud control and In the hundreds/thousands of DB metrics that seem to be gathered i couldn't find one which actually collected the schemas so i went about creating my own - which I'll explain below. However i later found there was a built in one that could do this and i decided to use that instead - which I'll come on to later. I thnk it's useful to explain how the manual one works as it's a technique that can be re-used for other checks.

First up we navigate to Enterprise->monitoring->metric extensions

Then we fill in some basic info

Then a simple query to fetch schema name and a count of the tables it owns

Then define 2 columns to hold the results of the metric - note i define the schema name as the 'key' column and the count as a data column

So we have this on the column summary screen

Use default credentials

Now do a test against a db - and we get results just fine

Now review everything

And it's created

So now we have this metric created and we want to 'send' it to all our agents to run on database targets - we have to:

Actions-> save as deployable draft

Actions-> publish

Actions ->deploy to targets

And then you can shoose where you want to send it. Now at this point i decided i didn't want to publish it that way (directly) - i wanted to attach it to a template and let the template deploy it. In earlier posts I've demoed how to set up admin groups so when new targets are added they automatically get assigned to a group and given the correct templates.

So i went about doing it that way.....

This is when i noticed that all of the previous screenshots i took were irrelevant for what i wanted to do (i've included the process anyway as i wasn't going to waste all that effort - and it is still useful)

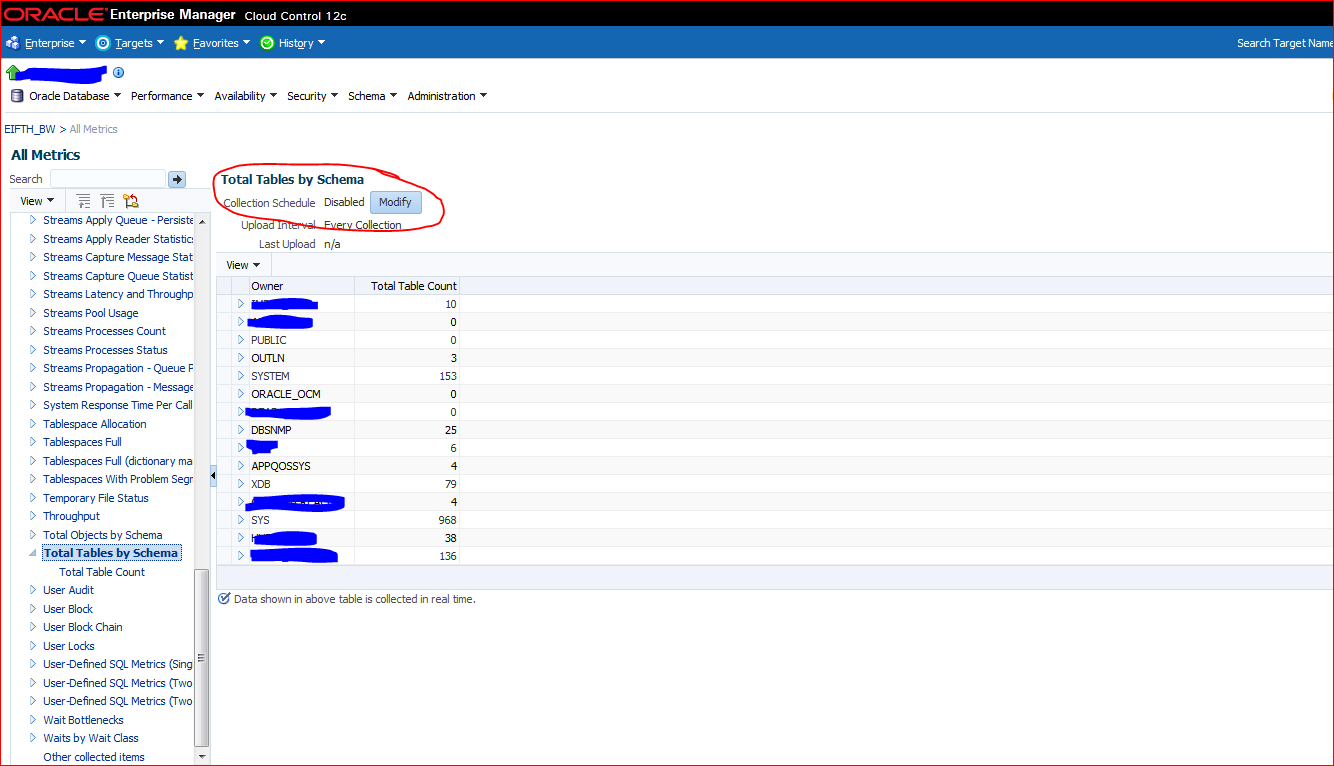

Now i don't think this was there in 12.1.0.3 (when i first started looking at doing this) - but it is there in 12.1.0.4. Maybe i missed it and it always was there - don't know now...... What i actually needed to do was just 'activate' a metric called 'total tables by schema' - see screenshot below.

This actually does even more than i wanted - it also gives 'schemas' that own no tables (so must have some sotored pl/sql for example). As you can see by default this metric is disabled so will not be collected - it can only be seen because of the 'real time' view of the metric where it is collected when you click on the screen.

OK - so now i have a metric that can collect what i want now i just need to set it active.

Now again you can just set this individually should you wish - but for me i need to change the template so everything (including new targets) picks this up.

I've talked about admin groups here so i won't go over that again - but what i did was to add an 'enabled' version of this single metric to the monitoring template already asociated with the admin groups - this then gets auto published to all the targets (or it can be pushed if you don't want to wait).

Note - a single enabled metric overrides the default disabled metric for the database default template in this case

OK - we're nearly there. Once this is pushed out the agent is happily collecting this data and uploading it to the OMR database. Now we just need to query it to see our schema list...

Now as I've done this before i knew where to look - but tracking down the right tables/views to use can at first be a little daunting

The basic query is this

SELECT mt.target_name as "Database Name",

KEY_PART_1 as "Schema Name",

mv.value as "Number of Tables",

mv.collection_time

FROM sysman.GC_METRIC_VALUES mv, MGMT_TARGETS MT

WHERE ENTITY_GUID = MT.Target_Guid

AND METRIC_COLUMN_NAME = 'table_count'

Which gives you the data directly from the raw upload

I then expand that further to exclude some known accounts and to only display data for my 'live' systems based on data i populated already - see here for how i did that

I then end up with this query

with envtype as

(select tgt.target_name, tp.property_value

from sysman.GC$TARGET_PROPERTIES tp, sysman.gc$target tgt

where property_name = 'orcl_gtp_line_of_bus'

and tp.target_guid = tgt.target_guid

and tgt.target_type = 'oracle_database')

SELECT mt.target_name as "Database Name",

KEY_PART_1 as "Schema Name",

mv.value as "Number of Tables",

mv.collection_time,

env.property_value as "Environment type"

FROM sysman.GC_METRIC_VALUES mv, MGMT_TARGETS MT, envtype env

WHERE ENTITY_GUID = MT.Target_Guid

AND METRIC_COLUMN_NAME = 'table_count'

and env.target_name = mt.target_name

and env.property_value = 'LIVE'

and key_part_1 not in (select user_name from sys.DEFAULT_PWD$)

and mv.value <> 0

and key_part_1 not in ('SQLTXPLAIN',

'OEMUSER',

'OJVMSYS',

'ORA_TIV',

'OEM_USER',

'OPS$ORACLE',

'ORA_AUD')

and key_part_1 not like 'APEX%'

and not regexp_like(key_part_1, '^([A-Z]{1}[0-9]{4,5})')

Couple of little tricks with the query

1) Use the with clause to get the list of environments built - esier to read having it split out

2) Get a list of 'built in' accounts from the sys table default_pwd$ for exclusion- i don't care about oracle supplied schemas

3) Use a regular expression to remove some of the accounts I'm not interested in - these are very powerful but often confusingly documented - it took me a while to get the above statement correct - some of the [:alpha:] style syntax you could use did not seem to work as expected

So here is the output

So i now have an up do date list of all the schemas that exist in all of my live databases - and the work involved to collect that (and keep it up to date) is actually minimal.

Cloud control shows it's worth yet again.

Comments

Post a Comment